- State the null and alternative hypotheses

- Identify a test statistic

- Analyze sample data

- Interpret results and reject or fail to reject null hypothesis

Monthly Archives: February 2024

Oracle Cloud Infrastructure: Data Management Tools

Oracle Cloud Infrastructure (OCI) offers a range of data management tools and services to help organizations store, process, analyze, and manage their data. Here are some key Oracle Cloud Infrastructure data management tools and services:

- Oracle Autonomous Database: Oracle Autonomous Database is a fully managed, self-driving database service that eliminates the complexity of database administration tasks such as provisioning, patching, tuning, and backups. It supports both transactional and analytical workloads and offers high availability, scalability, and security.

- Oracle Cloud Object Storage: Oracle Cloud Object Storage is a scalable and durable object storage service that allows organizations to store and retrieve large amounts of unstructured data. It offers flexible storage tiers, including Standard, Infrequent Access, and Archive, so that data can be placed according to how often it is accessed.

- Oracle MySQL Database Service: Oracle MySQL Database Service is a fully managed MySQL database service that offers high availability, scalability, and security. It automates administrative tasks such as provisioning, patching, and backups, allowing organizations to focus on their applications.

- Oracle Database Exadata Cloud Service: Oracle Database Exadata Cloud Service is a fully managed database service that is optimized for running Oracle Database workloads. It offers the performance, scalability, and reliability of Oracle Exadata infrastructure, along with automated management and monitoring capabilities.

- Oracle Big Data Service: Oracle Big Data Service is a cloud-based platform for running and managing big data and analytics workloads. It provides support for popular big data frameworks such as Hadoop, Spark, and Kafka, along with integration with Oracle Database and other Oracle Cloud services.

- Oracle Data Integration Platform: Oracle Data Integration Platform is a comprehensive data integration platform that enables organizations to extract, transform, and load (ETL) data across heterogeneous systems. It provides support for batch and real-time data integration, data quality management, and metadata management.

- Oracle Analytics Cloud: Oracle Analytics Cloud is a cloud-based analytics platform that enables organizations to analyze and visualize data from various sources. It offers self-service analytics tools for business users, along with advanced analytics and machine learning capabilities for data scientists.

- Oracle Data Safe: Oracle Data Safe is a cloud-based security and compliance service that helps organizations protect sensitive data in Oracle Databases. It provides features such as data discovery, data masking, activity auditing, and security assessments to help organizations meet regulatory requirements and secure their data.

- Oracle Cloud Infrastructure Data Flow: Oracle Cloud Infrastructure Data Flow is a fully managed service for running Apache Spark applications at scale. It provides a serverless, pay-per-use environment for processing large datasets using Apache Spark, along with integration with other Oracle Cloud services.

These are just a few examples of the data management tools and services available on Oracle Cloud Infrastructure. Depending on specific requirements and use cases, organizations can leverage OCI’s comprehensive portfolio of data services to meet their data management needs.

IBM Cloud: Data Management Tools

IBM Cloud offers a variety of data management tools and services to help organizations store, process, analyze, and manage their data. Here are some key IBM Cloud data management tools and services:

- IBM Db2 on Cloud: IBM Db2 on Cloud is a fully managed, cloud-based relational database service that offers high availability, scalability, and security. It supports both transactional and analytical workloads and provides features such as automated backups, encryption, and disaster recovery.

- IBM Cloud Object Storage: IBM Cloud Object Storage is a scalable and durable object storage service that allows organizations to store and retrieve large amounts of unstructured data. It offers flexible storage classes, including Standard, Vault, and Cold Vault, with configurable data durability and availability.

- IBM Cloudant: IBM Cloudant is a fully managed NoSQL database service based on Apache CouchDB that is optimized for web and mobile applications. It offers low-latency data access, automatic sharding, full-text search, and built-in replication for high availability and data durability.

- IBM Watson Studio: IBM Watson Studio is an integrated development environment (IDE) that enables organizations to build, train, and deploy machine learning models and AI applications. It provides tools for data preparation, model development, collaboration, and deployment, along with built-in integration with popular data sources and services.

- IBM Watson Discovery: IBM Watson Discovery is a cognitive search and content analytics platform that enables organizations to extract insights from unstructured data. It offers natural language processing (NLP), entity extraction, sentiment analysis, and relevancy ranking to help users discover and explore large volumes of textual data.

- IBM Cloud Pak for Data: IBM Cloud Pak for Data is an integrated data and AI platform that provides a unified environment for collecting, organizing, analyzing, and infusing AI into data-driven applications. It includes tools for data integration, data governance, business intelligence, and machine learning, along with built-in support for hybrid and multi-cloud deployments.

- IBM InfoSphere Information Server: IBM InfoSphere Information Server is a data integration platform that helps organizations understand, cleanse, transform, and deliver data across heterogeneous systems. It offers capabilities for data profiling, data quality management, metadata management, and data lineage tracking.

- IBM Db2 Warehouse: IBM Db2 Warehouse is a cloud-based data warehouse service that offers high performance, scalability, and concurrency for analytics workloads. It supports both relational and columnar storage, in-memory processing, and integration with IBM Watson Studio for advanced analytics and AI.

- IBM Cloud Pak for Integration: IBM Cloud Pak for Integration is a hybrid integration platform that enables organizations to connect applications, data, and services across on-premises and cloud environments. It provides tools for API management, messaging, event streaming, and data integration, along with built-in support for containers and Kubernetes.

These are just a few examples of the data management tools and services available on IBM Cloud. Depending on specific requirements and use cases, organizations can leverage IBM Cloud’s comprehensive portfolio of data services to meet their data management needs.

Google Cloud Platform (GCP): Data Management Tools

Google Cloud Platform (GCP) provides a range of data management tools and services to help organizations store, process, analyze, and visualize their data. Here are some key Google Cloud data management tools and services:

- Google Cloud Storage: Google Cloud Storage is a scalable object storage service that allows organizations to store and retrieve data in the cloud. It offers multiple storage classes for different use cases, including Standard, Nearline, Coldline, and Archive, with varying performance and cost characteristics.

- Google BigQuery: Google BigQuery is a fully managed, serverless data warehouse service that enables organizations to analyze large datasets using SQL queries. It offers high performance, scalability, and built-in machine learning capabilities for advanced analytics and data exploration.

- Google Cloud Firestore and Cloud Bigtable: Google Cloud Firestore is a scalable, fully managed NoSQL document database service for building serverless applications, while Cloud Bigtable is a highly scalable NoSQL database service for real-time analytics and IoT applications. Both services offer low-latency data access and automatic scaling.

- Google Cloud SQL: Google Cloud SQL is a fully managed relational database service that supports MySQL, PostgreSQL, and SQL Server. It automates backups, replication, patch management, and scaling, allowing organizations to focus on their applications instead of database administration.

- Google Cloud Spanner: Google Cloud Spanner is a globally distributed, horizontally scalable relational database service that offers strong consistency and high availability. It is suitable for mission-critical applications that require ACID transactions and global scale.

- Google Cloud Dataflow: Google Cloud Dataflow is a fully managed stream and batch processing service that allows organizations to process and analyze data in real-time. It offers a unified programming model based on Apache Beam for building data pipelines that can scale dynamically with demand.

- Google Cloud Dataproc: Google Cloud Dataproc is a fully managed Apache Hadoop and Apache Spark service that enables organizations to run big data processing and analytics workloads in the cloud. It offers automatic cluster provisioning, scaling, and management, along with integration with other GCP services.

- Google Cloud Pub/Sub: Google Cloud Pub/Sub is a fully managed messaging service that allows organizations to ingest and process event streams at scale. It offers reliable message delivery, low-latency message ingestion, and seamless integration with other GCP services.

- Looker Studio (formerly Google Data Studio): Looker Studio is a free, customizable data visualization and reporting tool that allows organizations to create interactive dashboards and reports from various data sources. It offers drag-and-drop functionality and real-time collaboration features.

These are just a few examples of the data management tools and services available on Google Cloud Platform. Depending on specific requirements and use cases, organizations can leverage GCP’s comprehensive portfolio of data services to meet their data management needs.

Azure: Data Management Tools

Microsoft Azure offers a comprehensive suite of data management tools and services to help organizations store, process, analyze, and visualize their data. Here are some key Azure data management tools and services:

- Azure SQL Database: Azure SQL Database is a fully managed relational database service that offers built-in high availability, automated backups, and intelligent performance optimization. It supports both single databases and elastic pools for managing multiple databases with varying resource requirements.

- Azure Cosmos DB: Azure Cosmos DB is a globally distributed, multi-model database service designed for building highly responsive and scalable applications. It supports multiple data models including document, key-value, graph, and column-family, and offers automatic scaling, low-latency reads and writes, and comprehensive SLAs.

- Azure Data Lake Storage: Azure Data Lake Storage is a scalable and secure data lake service that allows organizations to store and analyze massive amounts of structured and unstructured data. It offers integration with various analytics and AI services and supports hierarchical namespace for organizing data efficiently.

- Azure Synapse Analytics: Azure Synapse Analytics (formerly SQL Data Warehouse) is an analytics service that enables organizations to analyze large volumes of data using both serverless and provisioned resources. It provides integration with Apache Spark and SQL-based analytics for data exploration, transformation, and visualization.

- Azure HDInsight: Azure HDInsight is a fully managed Apache Hadoop, Spark, and other open-source big data analytics service in the cloud. It enables organizations to process and analyze large datasets using popular open-source frameworks and tools.

- Azure Data Factory: Azure Data Factory is a fully managed extract, transform, and load (ETL) service that allows organizations to create, schedule, and orchestrate data workflows at scale. It supports hybrid data integration, data movement, and data transformation across on-premises and cloud environments.

- Azure Stream Analytics: Azure Stream Analytics is a real-time event processing service that helps organizations analyze and react to streaming data in real-time. It supports both simple and complex event processing using SQL-like queries and integrates with various input and output sources.

- Azure Databricks: Azure Databricks is a fast, easy, and collaborative Apache Spark-based analytics platform that provides data engineering, data science, and machine learning capabilities. It enables organizations to build and deploy scalable analytics solutions using interactive notebooks and automated workflows.

- Azure Data Explorer: Azure Data Explorer is a fully managed data analytics service optimized for analyzing large volumes of telemetry data from IoT devices, applications, and other sources. It provides fast and interactive analytics with support for ad-hoc queries, streaming ingestion, and rich visualizations.

These are just a few examples of the data management tools and services available on Azure. Depending on specific requirements and use cases, organizations can leverage Azure’s comprehensive portfolio of data services to meet their data management needs.

AWS: Data Management Tools

Amazon Web Services (AWS) offers a variety of data management tools and services to help organizations collect, store, process, analyze, and visualize data. Some of the key data management tools and services provided by AWS include:

- Amazon S3 (Simple Storage Service): Amazon S3 is an object storage service that offers industry-leading scalability, data availability, security, and performance. It is commonly used for storing data for analytics, backup and recovery, archiving, and content distribution.

- Amazon RDS (Relational Database Service): Amazon RDS is a managed relational database service that supports several database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. It automates administrative tasks such as hardware provisioning, database setup, patching, and backups, allowing users to focus on their applications.

- Amazon Redshift: Amazon Redshift is a fully managed data warehouse service that makes it easy to analyze large datasets using SQL queries. It offers fast query performance by using columnar storage and parallel processing, making it suitable for analytics and business intelligence workloads.

- Amazon DynamoDB: Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. It is suitable for applications that require low-latency data access and flexible data models.

- Amazon Aurora: Amazon Aurora is a high-performance, fully managed relational database service that is compatible with MySQL and PostgreSQL. It offers performance and availability similar to commercial databases at a fraction of the cost.

- AWS Glue: AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics. It automatically discovers and catalogs datasets, generates ETL code to transform data, and schedules and monitors ETL jobs.

- Amazon EMR (Elastic MapReduce): Amazon EMR is a managed big data platform that simplifies the processing of large datasets using popular distributed computing frameworks such as Apache Hadoop, Apache Spark, and Presto. It automatically provisions and scales compute resources based on workload demand.

- Amazon Kinesis: Amazon Kinesis is a platform for collecting, processing, and analyzing real-time streaming data at scale. It offers services such as Kinesis Data Streams for ingesting streaming data, Kinesis Data Firehose for loading data into data lakes and analytics services, and Kinesis Data Analytics for processing and analyzing streaming data with SQL.

- Amazon OpenSearch Service (formerly Amazon Elasticsearch Service): Amazon OpenSearch Service is a managed service that makes it easy to deploy, operate, and scale OpenSearch and legacy Elasticsearch clusters in the AWS Cloud. It is commonly used for log and event data analysis, full-text search, and real-time application monitoring.

These are just a few examples of the data management tools and services available on AWS. Depending on specific requirements and use cases, organizations can choose the most appropriate AWS services to meet their needs.

Data Science: Hypotheses

A hypothesis is a way to formulate a question or an idea so that it is statistically testable through data collection, analysis, and inference.

Null hypothesis (H0): no effect

Alternative hypothesis (HA): an effect

Reject or fail to reject the null hypothesis
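The reject-or-fail-to-reject decision can be sketched with a small coin-flip experiment, where H0 says the coin is fair and HA says it is not. This is a minimal illustration, not a method from the original notes: the function names, the exact binomial test, and the 0.05 significance level are all assumptions chosen for the example.

```python
from math import comb


def binomial_two_sided_p(heads, flips, p0=0.5):
    """Exact two-sided p-value for `heads` in `flips` under H0: P(heads) = p0."""
    # Probability of every possible head count if the null hypothesis is true.
    pmf = [comb(flips, k) * p0**k * (1 - p0) ** (flips - k) for k in range(flips + 1)]
    observed = pmf[heads]
    # Add up all outcomes that are at least as unlikely as the observed one.
    # The small tolerance guards against floating-point ties.
    p_value = sum(p for p in pmf if p <= observed * (1 + 1e-9))
    return min(1.0, p_value)


def decide(p_value, alpha=0.05):
    """Reject H0 when the p-value falls below the chosen significance level."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


print(decide(binomial_two_sided_p(10, 10)))  # 10 heads in 10 flips
print(decide(binomial_two_sided_p(5, 10)))   # 5 heads in 10 flips
```

Ten heads in ten flips gives a p-value of 2/1024, so the null hypothesis is rejected at the assumed 0.05 level. Five heads in ten flips is the most likely outcome under H0, so we fail to reject it.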

Basic Rules of Discrete Probability

In this reading, we’ll introduce discrete and continuous probability, walk through basic probability notation, and describe a few common rules of discrete probability.

Types of Probability

When working with probability in the real world, it’s common to see probability broken down into two categories: discrete probability and continuous probability.

Discrete Probability

Discrete probability deals with discrete variables – that is, variables that have countable values, like integers. Examples of discrete variables include the number of fish in a lake and the number of hobbies that an adult in the U.S. enjoys.

Therefore, the probability of a discrete variable describes how likely each specific value of that variable is to occur. One example is the probability of there being exactly 142 fish in the lake. Another is the probability of a particular person enjoying exactly 4 hobbies.

Each possible value of these discrete variables has its own respective non-zero probability of occurring in a dataset.

Continuous Probability

Continuous probability deals with continuous variables – that is, variables that have infinite and uncountable values. Examples of continuous variables include an individual’s weight and how long it takes to run a kilometer.

As with discrete probability, continuous probability describes how likely the values of a variable are to occur. However, because a continuous variable has an infinite set of possible outcomes, the probability of any one exact value, like 2.0000001, is effectively zero. For this reason, continuous probability is usually described over ranges of values rather than single values.

We’ll cover more on continuous probability later in this course, but we’ll focus on discrete probability for now.

Probability Notation

In order to learn the basics of discrete probability, it’s important to understand the basics of probability notation.

To symbolize the probability of a discrete event occurring, we use the following notation: P(A) = 0.5

This reads as “the probability of Event A occurring is equal to 0.5.” This can be interpreted as a 50 percent chance of Event A occurring.

Let’s consider a common real-world example: flipping a coin. Below, we write the probability of a flipped standard coin landing heads-up.

P(Heads) = 0.5

To shorten this, we will commonly represent the outcome (Heads) with a single letter. In this case, we are shortening Heads to a capital H.

P(H) = 0.5

Let’s take a look at an example with more possible outcomes: rolling a standard six-sided die. Each of the six outcomes is equally likely to occur when the die is rolled.

P(1) = P(2) = P(3) = P(4) = P(5) = P(6) = 1/6 or about 0.167.

It’s important to note that these examples are theoretical. They are based on what we know about coins and dice, rather than on actual observations recorded from the real world. In reality, the proportion of times an event actually occurs will most likely not match the theoretical probability exactly. For example, we might roll a 4 slightly more often, or slightly less often, than 1 in 6 times. So based on recorded data, we might find: P(4) = # of rolls where 4 occurred / # of total rolls = 3/20 = 0.15

However, whether we are working with theoretical or recorded probabilities, the following must be true:

- The probability of each outcome must be between 0 and 1: 0 ≤ P(x) ≤ 1.

- The sum of the probabilities of each outcome must equal 1: ΣP(x) = 1.
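To make the difference between theoretical and recorded probabilities concrete, here is a small sketch that simulates 20 die rolls and checks both conditions on the recorded probabilities. The helper name `empirical_probabilities`, the fixed seed, and the choice of 20 rolls are assumptions made for illustration.

```python
import random
from collections import Counter


def empirical_probabilities(rolls):
    """Return the recorded probability of each die face in `rolls`."""
    counts = Counter(rolls)
    total = len(rolls)
    # Faces that never came up get a probability of 0.
    return {face: counts[face] / total for face in range(1, 7)}


rng = random.Random(42)  # fixed seed so the run is repeatable
rolls = [rng.randint(1, 6) for _ in range(20)]
probs = empirical_probabilities(rolls)

for face, p in probs.items():
    print(f"P({face}) = {p:.2f} (theoretical: {1 / 6:.3f})")

# Both conditions hold for recorded probabilities as well.
assert all(0 <= p <= 1 for p in probs.values())
assert abs(sum(probs.values()) - 1) < 1e-9
```

With only 20 rolls, the recorded values will usually differ noticeably from 1/6. Increasing the number of rolls tends to bring them closer to the theoretical value.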

Common Discrete Probability Rules

When we work with discrete probability, we’re often interested in more complex questions than the likelihood of a single event occurring in a single trial or draw. Sometimes, we’re interested in the probability that at least one of a set of events occurs, or in the probability that multiple events occur simultaneously.

In order to answer these questions, we need to understand some of the basic rules of discrete probability. Two common rules are the additive rule and the multiplicative rule.

Additive Rule

The additive rule states that for two events in a single-trial probability experiment, the probability of either event occurring is equal to the sum of their individual probabilities, as long as those events are mutually exclusive.

Consider the following theoretical examples from our coin and die examples:

P(H or T) = P(H) + P(T) = 0.5 + 0.5 = 1

P(1 or 4) = P(1) + P(4) = ⅙ + ⅙ = ⅓

This rule can be generally stated as:

P(A or B) = P(A) + P(B)

The above rule holds when the events are mutually exclusive, meaning they cannot both occur. A single coin flip can only bring up heads or tails, not both, and a single roll of a die cannot land on both the number 1 and the number 4.

But what if both events can occur? Let’s look at an example using a standard deck of cards. Suppose we’re interested in the probability that a single drawn card is a king, denoted by K, or is of the heart suit, denoted by H.

The probabilities of each of these single events are below:

P(K) = 4/52

P(H) = 13/52

If these events were mutually exclusive, meaning there’s no card that is simultaneously a king and of a heart suit, we’d just add P(K) and P(H) together. However, there is a single card that is a king and of a heart suit.

Its probability is denoted below:

P(K and H) = 1/52

In order to correctly calculate the probability of a single drawn card being a king or of the heart suit, we need to subtract the probability of drawing the king of hearts card. This is because that card is counted as both part of P(K) and P(H) – we’re simply making sure to not count it twice.

P(K or H) = P(K) + P(H) – P(K and H) = 4/52 + 13/52 – 1/52 = 16/52

This rule can be generally stated as:

P(A or B) = P(A) + P(B) – P(A and B)

The additive rule helps us compute the probability that at least one of a set of events occurs. When the events are not mutually exclusive, we need to subtract the probability that they both occur.
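The card example can be checked by enumerating the deck and counting. The sketch below is one way to do that; the deck representation and the helper names (`prob`, `is_king`, `is_heart`) are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
DECK = list(product(RANKS, SUITS))  # 52 (rank, suit) pairs


def prob(event):
    """Probability that a single drawn card satisfies `event`."""
    favorable = sum(1 for card in DECK if event(card))
    return Fraction(favorable, len(DECK))


def is_king(card):
    return card[0] == "K"


def is_heart(card):
    return card[1] == "hearts"


p_k = prob(is_king)
p_h = prob(is_heart)
p_k_and_h = prob(lambda card: is_king(card) and is_heart(card))
p_k_or_h = prob(lambda card: is_king(card) or is_heart(card))

# Counting directly agrees with the additive rule.
assert p_k_or_h == p_k + p_h - p_k_and_h
print(p_k_or_h)  # 4/13, which is 16/52 in lowest terms
```

Using `Fraction` keeps the arithmetic exact, so the comparison does not depend on floating-point rounding.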

Multiplicative Rule

The multiplicative rule states that the probability of two events both occurring is equal to the probability of the first event occurring multiplied by the probability of the second event occurring, as long as those events are independent.

Let us combine our coin and die examples by using the multiplicative rule to compute the probability that a flip of the coin lands heads-up and the die lands with the number 4 facing up.

P(H and 4) = P(H) * P(4) = ½ * ⅙ = 1/12

This rule can be generally stated as:

P(A and B) = P(A) * P(B)

The above rule holds when the two events are independent of one another. The outcome of flipping a coin has absolutely no impact on the outcome of rolling a die.

However, if the events are not independent of one another, then the multiplicative rule states that the probability of two events both occurring is equal to the probability of the first event occurring multiplied by the probability of the second event occurring given that the first event occurred. This last condition accounts for the dependence of the two events.

This rule can be generally stated as:

P(A and B) = P(A) * P(B|A)

Let’s consider our playing card example. Suppose you want to know the probability of drawing a card that is both a king and of the hearts suit. In order to determine this, you need to know the probability of a card being a king and the probability of the card being of the hearts suit given that it is a king.

These probabilities are denoted below:

P(K) = 4/52

P(H|K) = ¼

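This general form of the rule applies whether or not the events are independent, so we multiply these two probabilities to compute P(K and H). (In a standard deck, rank and suit happen to be independent: P(H|K) = ¼ = P(H), so the simpler form P(K) * P(H) gives the same result.)

P(K and H) = P(K) * P(H|K) = 4/52 * ¼ = 1/52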

The multiplicative rule helps us compute the probability that two events both occur. When the events are not independent of one another, we multiply the probability of the first event by the probability of the second event given that the first has occurred.
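Both forms of the multiplicative rule can be verified by enumeration. The following sketch counts outcomes for the coin-and-die example and for the card example; the variable names and the deck representation are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

# Coin flip combined with a die roll: 2 * 6 = 12 equally likely outcomes.
outcomes = list(product("HT", range(1, 7)))
p_heads = Fraction(sum(1 for coin, _ in outcomes if coin == "H"), len(outcomes))
p_four = Fraction(sum(1 for _, die in outcomes if die == 4), len(outcomes))
p_heads_and_four = Fraction(
    sum(1 for coin, die in outcomes if coin == "H" and die == 4), len(outcomes)
)
# Independent events: P(A and B) = P(A) * P(B)
assert p_heads_and_four == p_heads * p_four
print(p_heads_and_four)  # 1/12

# Card example: P(K and H) = P(K) * P(H|K)
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
deck = list(product(RANKS, SUITS))
kings = [card for card in deck if card[0] == "K"]

p_king = Fraction(len(kings), len(deck))
# Conditional probability: restrict attention to the kings only.
p_heart_given_king = Fraction(
    sum(1 for card in kings if card[1] == "hearts"), len(kings)
)
p_king_and_heart = p_king * p_heart_given_king
print(p_king_and_heart)  # 1/52
```

The conditional probability is computed by shrinking the sample space to the four kings and counting hearts within it, which mirrors how P(H|K) is read: "hearts, given a king."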

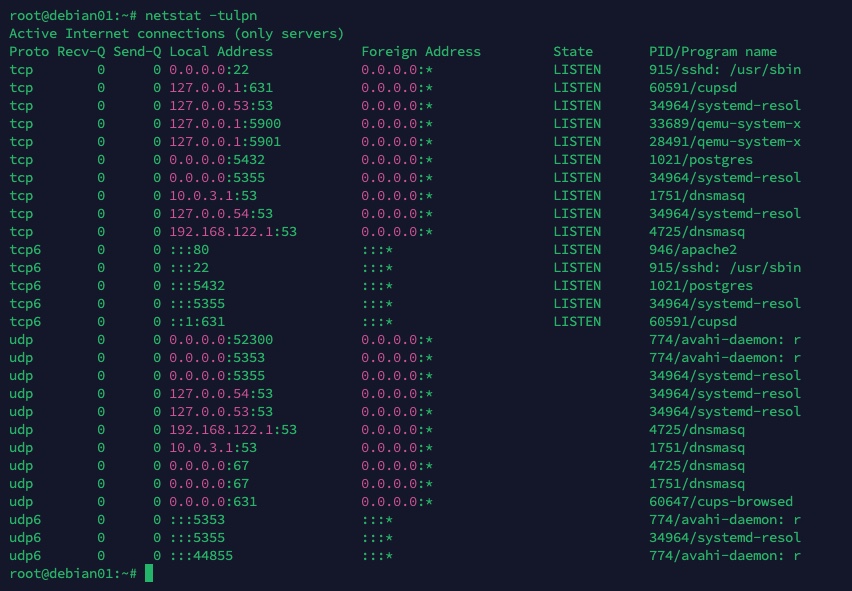

Linux: using netstat -tulpn

The netstat -tulpn command is used to display listening network sockets and the processes that own them. Here’s a breakdown of each option:

- -t: Display TCP connections.

- -u: Display UDP connections.

- -l: Show only listening sockets.

- -p: Show the PID (process ID) and name of the program to which each socket belongs.

- -n: Show numerical addresses instead of resolving hostnames.

So, netstat -tulpn lists the listening TCP and UDP sockets, displays the process associated with each socket (along with its PID), and shows numerical addresses and ports instead of resolving host and service names. Seeing process information for sockets owned by other users typically requires root privileges (for example, via sudo). This can be useful for network troubleshooting and monitoring purposes.
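For monitoring scripts, the output can be turned into structured records. The sketch below parses text in the column layout that the Linux net-tools netstat typically prints for these options. The sample text is made up for illustration rather than captured from a real host, and the function name `parse_listening_sockets` is hypothetical. It also assumes UDP rows leave the State column empty, which is the usual case for listening sockets.

```python
# Illustrative sample only; real output varies by system and netstat version.
SAMPLE_OUTPUT = """\
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      812/sshd
tcp6       0      0 :::80                   :::*                    LISTEN      -
udp        0      0 127.0.0.53:53           0.0.0.0:*                           640/systemd-resolve
"""


def parse_listening_sockets(text):
    """Parse `netstat -tulpn`-style text into a list of dictionaries."""
    sockets = []
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip headers and anything that is not a TCP or UDP row.
        if not line.startswith(("tcp", "udp")):
            continue
        # Assumption: UDP rows have no State column, so one fewer field.
        is_udp = line.startswith("udp")
        fields = line.split(None, 5 if is_udp else 6)
        proto, local, owner = fields[0], fields[3], fields[-1]
        # Split on the last colon so IPv6 addresses such as "::" survive.
        address, port = local.rsplit(":", 1)
        # The owner column is "PID/name", or "-" when it is not visible.
        pid, _, program = owner.partition("/")
        sockets.append(
            {
                "proto": proto,
                "address": address,
                "port": int(port),
                "pid": int(pid) if pid.isdigit() else None,
                "program": program.strip() or None,
            }
        )
    return sockets


for sock in parse_listening_sockets(SAMPLE_OUTPUT):
    print(sock)
```

To use it on a live system, the text could come from running the command with Python's `subprocess.run(["netstat", "-tulpn"], capture_output=True, text=True)` and passing its `stdout` to the parser.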